Internationalization & localization

Hello!

I designed this blog to be more serious and formal compared to my first blog. In order to expand my reach, I decided to write articles in English. However, I still find it easier to formulate some parts of the article in Russian and then translate them into English. As a result, after each publication, there is quite a bit of material left in my workshop that could be polished a bit and published, but unfortunately, the technical capability to do so was not available until recently.

So today, I will tell you about how I upgraded my blog to support multiple languages and also share the challenges I encountered.

Theory

A minute of nerdiness, as usual.

Internationalization is the process of designing a software application so that it can be adapted to various languages and regions without engineering changes.

Localization is the process of adapting internationalized software for a specific region or language by translating text and adding locale-specific components.

In this project I did both.

Data

The development (referring to the stage when we've already moved on to writing code) of any feature in my blog starts with defining types. Whether it's component props, DTOs, or database models, all of this is described using TypeScript. This approach ensures a rather obvious effect - when something changes in one place, something else might break elsewhere, and typically, the fix is to repair it in desired way to make the feature work.

I'm developing the blog in a monorepository, which means both the apiweb-clientsharedshared

I use Kysely SQL query builder for database querying. The usage looks like this:

const { id } = await pg

.selectFrom('articles')

.select(['id'])

.where('slug', '=', slug)

.executeTakeFirst();This query will retrieve the article's idslugJOIN or subqueries). Since each controller knows what type it should return to the client, the entire project, despite deploying each application separately, forms a unified system.

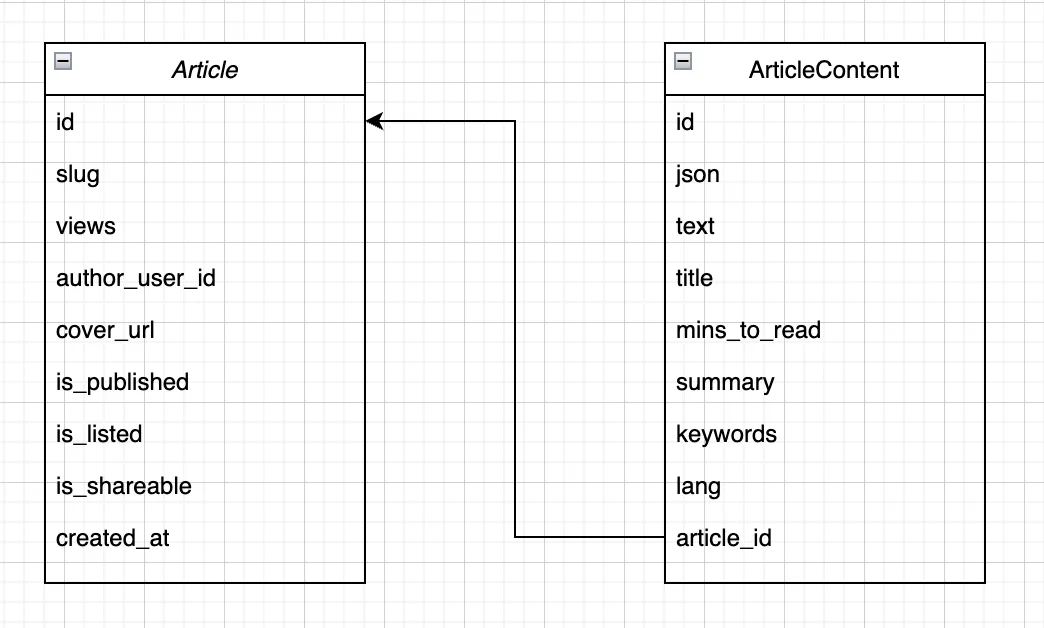

This case was no exception. First and foremost, to support multiple languages, I had to make significant changes to the database structure. Everything that can be localized was moved to a separate table, so in the end, each ArticleArticleContentlangarticle_id

I decided not to create a separate Languageslang field is just a varchar where a two-letter language code (according to the ISO 639-1 standard) is stored. I decided not to do this because adding a language isn't as simple as just inserting a record into the database; that's quite a lot of work, including changes in a source code.

After updating the data schema, almost all controllers depending on it broke. I fixed all the errors and in some places also updated the DTOs. After updating the DTOs, errors started to appear on the client side, and those were also fixed. In fact, the multilingual articles were ready by that time. Although there was still a lot of work ahead in terms of translating the interface in this project, I'll talk about that a bit later.

Font

When I was designing this blog, I wanted it to have a clean and formal appearance, perhaps even resembling a printed publication. I tried out several fonts, and my choice fell on Libre Baskerville.

Baskerville is a serif typeface designed in the 1750s by John Baskerville (1706–1775) in Birmingham, England. Baskerville is classified as a transitional typeface, intended as a refinement of what are now called old-style typefaces of the period.

Libre Baskerville is a web font optimized for body text. It is based on American Type Founder's Baskerville 1941 but has a higher x-height, wider counters and slightly less contrast, making it well-suited for screen reading.

I really like this font, and in my subjective opinion, it suits my blog very well. However, during the design of this feature, it was discovered that Libre Baskerville doesn't support Cyrillic glyphs. This means that when attempting to render text with Russian letters, the font will fallback to a serif font (most likely Times New Roman), and it will look not very good:

Of course, this is unacceptable, so I started looking for ways to solve this problem. I tried about a dozen free fonts similar to Libre Baskerville, but my search didn't yield any success. Then I even thought about purchasing a paid version of the Baskerville font on the Paratype website, for example. I would have to pay $60 for two styles, plus there are additional complexities such as the increasing subscription cost based on pageviews, and a rather strange requirement - "May not use the font to create content by visitors to your websites." This is strange requirement because in my case, it seems like this requirement restricts the use of the font in comment forms, for example.

In general, there were quite a few questionable and unclear points, and on top of that, I would have to pay, and $60 is relatively a lot, roughly equivalent to about half a year's payment for hosting services. That's why I ruled out this option as well.

In the end, there was nothing left to do but take Libre Baskerville (it's distributed under the OFL) and add Cyrillic glyphs to it. I thought it wouldn't be too difficult, especially because some letters look the same as Latin ones and could simply be copied. In reality, it's indeed not very difficult, but it's quite a large and painstaking task. Of course, I understand that I haven't experienced creating a font from scratch. I already had ready guidelines from the authors of Libre Baskerville and even some letters for comparison. Honestly, I think I wouldn't be able to create a font entirely from scratch.

So, slowly but surely, I was drawing letter by letter. In the end, I had to draw 33 * 2 (uppercase + lowercase) * 2 (regular + bold) = 132 letters. Some letters I copied without changes, some I created from others (for example, П can easily be made from Н, and Ж from two Кs). However, some I had to draw almost from scratch (for example, Ф or Л), which explains why those letters turned out slightly more uneven than the rest. Although, to be honest, all the letters turned out a bit uneven, it's still better than falling back to Times New Roman, and overall, I was satisfied with the result.

I used Glyphr Studio, and overall, it's quite a convenient program. However, sometimes it has memory leaks and crashes, so I had to save every time after drawing each letter. Unfortunately, I had to redraw some things.

And to you, dear readers, right now, these two wonderful fonts are entirely available for free (licensed under OFL):

- License

Interface

Dealing with the interface is much simpler. I use react-intl for internationalization. In the code, I use keys, and then, based on the key and the selected language, the appropriate translation is inserted. Additionally, it supports pluralization, value substitution, and formatting for time, dates, numbers, and so on out of the box.

But I want to share my experience, which can be helpful to make work with translations simpler.

The first recommendation is to store translations alongside the component. Let's take the example of the ArticleStats

📦ArticleStats

┣ 📂__Icon

┃ ┗ 📜ArticleStats__Icon.css

┣ 📂__Name

┃ ┣ 📜ArticleStats__Name.css

┃ ┗ 📜ArticleStats__Name.tsx

┣ 📂__Stat

┃ ┣ 📜ArticleStats__Stat.css

┃ ┗ 📜ArticleStats__Stat.tsx

┣ 📂__Value

┃ ┣ 📜ArticleStats__Value.css

┃ ┗ 📜ArticleStats__Value.tsx

┣ 📂ArticleStats.helpers

┃ ┗ 📜capitalize.ts

┣ 📂ArticleStats.i18n <- directory with translations

┃ ┣ 📜ArticleStats.en.json

┃ ┗ 📜ArticleStats.ru.json

┣ 📜ArticleStats.css

┗ 📜ArticleStats.tsxIn the ArticleStats.en.json

{

"ArticleStats.published": "Published",

"ArticleStats.views": "{views, plural, one {view} other {views}}",

"ArticleStats.mins": "{mins, plural, one {min} other {mins}}"

}As a result, all translation files for one component are stored in one place, and they can be easily compiled together using a simple Node.js script. Then, this single large file is read on the server, and all translations for all languages are loaded into memory at once. During SSR (server-side rendering), only the keys for the required language are sent to the client. I want to note that all keys are prefixed with the component's name to reduce the likelihood of collisions.

The second piece of advice I could offer is to restrict the use of anything other than string literals when specifying keys. Let me explain. To retrieve a string in react-intl, you must render the FormattedMessage element:

<FormattedMessage id="ArticleStats.published" />or call the intl.formatMessage

intl.formatMessage({ id: 'ArticleStats.published' })And sometimes I've seen examples with template strings and ternary operators, which actually make things more complicated.

For example, instead of:

<FormattedMessage id={`ArticleStats.${action}`} /> // action: 'published' | 'created' | 'updated'Much better to write:

const actionsMap = {

published: <FormattedMessage id="ArticleStats.published" />,

created: <FormattedMessage id="ArticleStats.created" />,

updated: <FormattedMessage id="ArticleStats.updated" />

};

<div>

{actionsMap[action]}

</div>The code becomes slightly more complex (though not significantly), but now you can search for such calls with regular expressions, not to mention querying the code through the AST (you can experiment with it here by yourself). Static code analysis in this situation is very useful because it allows you to immediately identify which keys are not being used, so you can remove them! The code becomes smaller, bundles become leaner, and there's nothing unnecessary - this is a beauty.

In fact, you can further improve the DX (developer experience) by writing a custom rule for ESLint. It may not be possible to auto-fix it, but correcting such occurrences will likely be straightforward. In most cases, complex expressions like these can be refactored into a hashmap or a switch-case. If you have just two keys, you can continue to use the ternary operator, but do it outside the message descriptor, not inside it.

Algorithm

First and foremost, we check the langAccept-LanguageAccept-Languageen) is chosen.

After determining the language, it's set as a "sticky" choice using a cookie. Afterward, the language can only be changed explicitly by visiting a page with the lang

SEO

A search engine robot should be aware that my website supports multiple languages. When requesting a page without query parameters, GoogleBot (or another search bot) will receive the English version (it doesn't send cookies and the Accept-Language header), which means the algorithm will fallback to en.

To indicate the language of the current page, the lang attribute of the html element is used.

And for links to other pages, link elements with the rel attribute set to alternate are used. It looks like this:

<link rel="alternate" hreflang="en" href="https://vladivanov.me/"> <!-- link to self -->

<link rel="alternate" hreflang="en" href="https://vladivanov.me/?lang=en">

<link rel="alternate" hreflang="ru" href="https://vladivanov.me/?lang=ru">Deploy

Kysely can generate migrations, but I decided to do everything myself. I manually did both the structural changes and data migration right in the production database container using psql😁. First, I created a new table, set up indexes for searching, dumped the Articles table, then deployed the new version of the service, and only after that dropped the old, unnecessary columns. It was a bit nerve-wracking, even though I think I have weekly backups from DigitalOcean. On the other hand, I've never actually tried to restore from them.

The whole feature was introduced under a feature flag, and regular users couldn't use it. After testing and creating editions in Russian for all articles in the admin office, I removed the feature flag. However, I temporarily disabled language detection based on the Accept-Language header.

Now, along with this article, the feature has started working as described. A language switch button has been added to the interface, and a little later, I will set up an email newsletter for subscribers. This is quite a significant milestone in the development history of my blog.

Conclusion

This was an extremely exciting project, considering that it covered all kinds of work. It involved development, database administration, and even design-related aspects like typography. I'm very pleased that my blog now has support of multiple languages, and I'm also happy to share this wonderful experience with you.

Please write in the comments if you're interested in how my blog works; I'm ready to tell you about any part of it.

Thank you for your attention!